Parallel Region

Parallel Construct

In this exercise, we create a parallel region and observe how the threads behave inside it. Parallelising the computational task within the parallel region is the subject of later exercises.

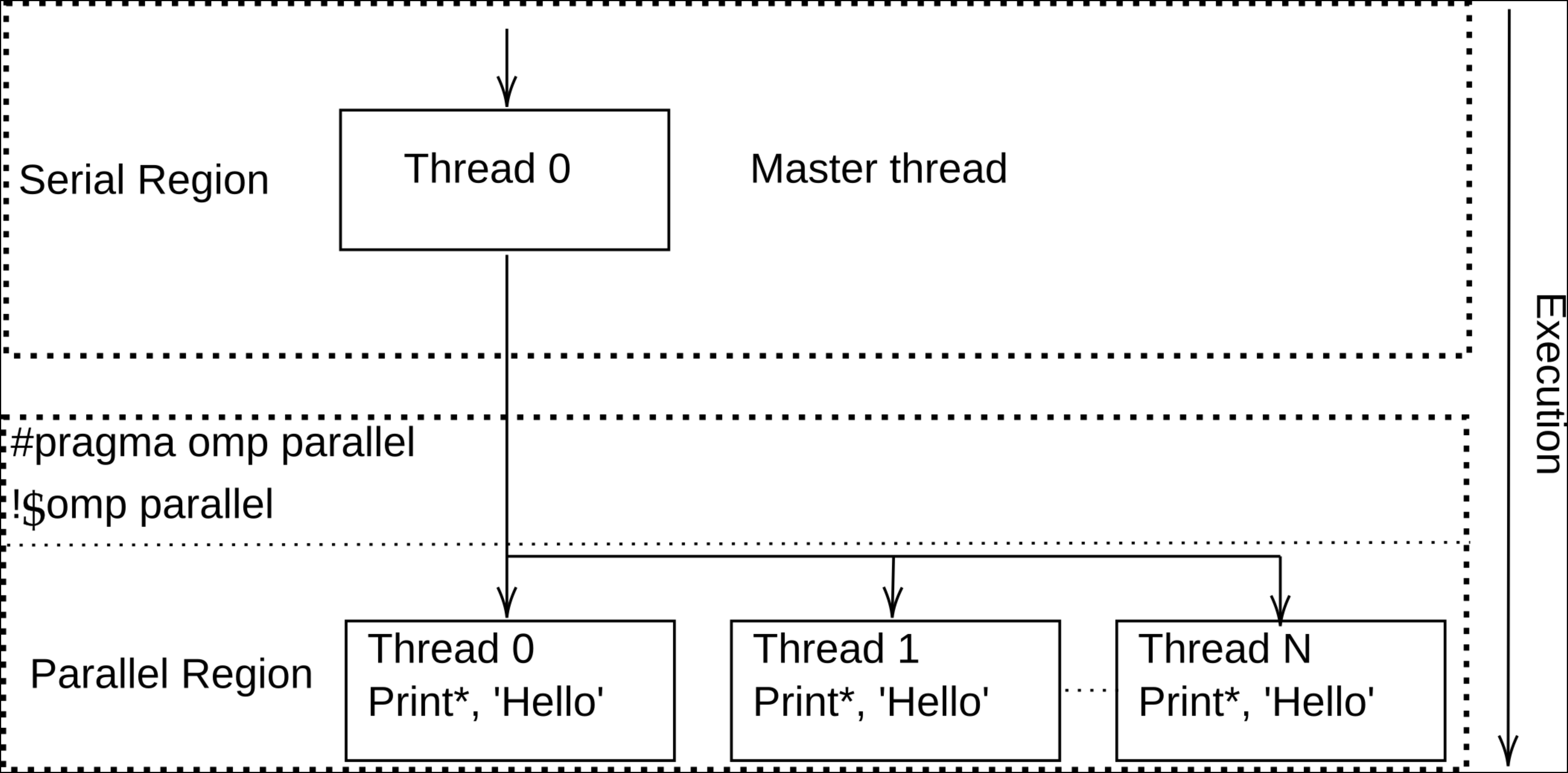

The above figure illustrates the behaviour of a parallel region: within it, a team of threads runs in parallel. The threads execute independently of each other, and there is no guaranteed order of execution.

To create a parallel region, we use the parallel construct: #pragma omp parallel followed by a structured block in C/C++, or !$omp parallel ... !$omp end parallel in Fortran.

To call the OpenMP runtime routines, we also need to include the OpenMP header: #include <omp.h> in C/C++, or use omp_lib in Fortran. The constructs and clauses themselves are enabled by the compiler's OpenMP flag.

Compilers

The following compilers support the OpenMP programming model.

- GNU - Open source; can be used on both Intel and AMD CPUs

- Intel - From Intel; optimised primarily for Intel CPUs

- AOCC - From AMD; suitable for AMD CPUs, especially the “Zen” core architecture

Examples (GNU, Intel and AMD): Compilation

Questions and Solutions

Examples: Hello World

    #include <iostream>
    #include <omp.h>

    using namespace std;

    int main()
    {
        cout << "Hello world from the master thread " << endl;
        cout << endl;

        // creating the parallel region (with N number of threads)
        #pragma omp parallel
        {
            cout << "Hello world from the parallel region " << endl;
        } // parallel region is closed

        cout << endl;
        cout << "end of the programme from the master thread" << endl;

        return 0;
    }

Compilation and Output

Questions

- What do you notice in this example? Can you control the printout from the parallel region, that is, how many times it is printed or executed?

- What happens if you do not include the OpenMP library, that is, #include <omp.h> (C/C++) or use omp_lib (Fortran)?

Creating a parallel region allows us to do parallel computation, but we should also have control over the threads created in it: for example, how many threads a particular computation needs, or which number a given thread has. For this, we need to know a few of the important environment routines provided by OpenMP. The list below shows the ones a programmer should know for writing optimised OpenMP code.

Environment Routines (important)

- Define the number of threads to be used in subsequent parallel regions

  (C/C++): void omp_set_num_threads(int num_threads);
  (FORTRAN): subroutine omp_set_num_threads(num_threads); integer num_threads

- To get the number of threads in the current parallel region

  (C/C++): int omp_get_num_threads(void);
  (FORTRAN): integer function omp_get_num_threads()

- To get the maximum number of threads available to a parallel region (system default unless changed)

  (C/C++): int omp_get_max_threads(void);
  (FORTRAN): integer function omp_get_max_threads()

- To get the number of the calling thread (from 0 up to the team size minus 1)

  (C/C++): int omp_get_thread_num(void);
  (FORTRAN): integer function omp_get_thread_num()

- To know the number of processors available to the device

  (C/C++): int omp_get_num_procs(void);
  (FORTRAN): integer function omp_get_num_procs()

Questions and Solutions

Questions

- How can you identify the thread numbers within the parallel region?

- What happens if you do not call omp_set_num_threads()? Then set it, for example omp_set_num_threads(5) (C/C++) or call omp_set_num_threads(5) (Fortran); what do you notice? Alternatively, you can set the number of threads used by the application in the shell before running it, for example export OMP_NUM_THREADS=5; what do you see?

Template:

    #include <iostream>
    #include <omp.h>

    using namespace std;

    int main()
    {
        cout << "Hello world from the master thread " << endl;
        cout << endl;

        // creating the parallel region (with N number of threads)
        #pragma omp parallel
        {
            // TODO: complete and uncomment the print statement below
            // cout << "Hello world from thread id "
            //      << " from the team size of "
            //      << endl;
        } // parallel region is closed

        cout << endl;
        cout << "end of the programme from the master thread" << endl;

        return 0;
    }

Solution:

    #include <iostream>
    #include <omp.h>

    using namespace std;

    int main()
    {
        cout << "Hello world from the master thread " << endl;
        cout << endl;

        // creating the parallel region (with N number of threads)
        #pragma omp parallel
        {
            cout << "Hello world from thread id "
                 << omp_get_thread_num() << " from the team size of "
                 << omp_get_num_threads()
                 << endl;
        } // parallel region is closed

        cout << endl;
        cout << "end of the programme from the master thread" << endl;

        return 0;
    }

Output from two runs with 10 threads. In the first run the text is interleaved because the threads write to cout concurrently; in both runs the order of the thread ids is arbitrary.

    ead id Hello world from thread id Hello world from thread id 3 from the team size of 9 from the team size of 52 from the team size of from the team size of 10
    0 from the team size of 10
    10
    10
    10
    7 from the team size of 10
    4 from the team size of 10
    8 from the team size of 10
    1 from the team size of 10
    6 from the team size of 10

    Hello world from thread id 0 from the team size of 10
    Hello world from thread id 4 from the team size of 10
    Hello world from thread id 5 from the team size of 10
    Hello world from thread id 9 from the team size of 10
    Hello world from thread id 2 from the team size of 10
    Hello world from thread id 3 from the team size of 10
    Hello world from thread id 7 from the team size of 10
    Hello world from thread id 6 from the team size of 10
    Hello world from thread id 8 from the team size of 10
    Hello world from thread id 1 from the team size of 10

Utilities

The main aim of parallel computation is to speed up a computation on a given parallel architecture. Therefore, measuring the run time and comparing the serial and parallel versions of the code is very important. To measure time, OpenMP provides a runtime routine, omp_get_wtime(), which returns the elapsed wall-clock time in seconds.

Time measuring

Created: April 26, 2023 10:45:49